Kubernetes is a powerful tool that helps businesses deploy, scale and manage containerized applications more efficiently and reliably. It has become a popular choice for businesses of all sizes, and its importance is likely to keep growing. By adopting Kubernetes, businesses can expect improvements in agility, scalability, reliability, security and cost-efficiency, which can ultimately lead to higher profits and greater customer satisfaction.

What is Kubernetes cluster management?

A Kubernetes cluster is a group of computers, called nodes, that work together to run applications packaged as containers. A container bundles everything a program needs to run, such as its code, runtime, libraries and configuration files. The nodes can be physical machines or virtual machines in the cloud.

The cluster is responsible for scheduling and running the containers and for managing the resources they use. When you set up a Kubernetes cluster, you declare the state you want: which programs should run, how many copies of each program you need, and details such as how much memory or storage each program should have.

Kubernetes then continuously works to make the cluster match this desired state. For example, if you deploy an application with a desired replica count of 3, Kubernetes starts 3 replicas of the application. If one of those replicas crashes, Kubernetes starts a new one to replace it.
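The desired-state behavior described above can be sketched as a toy reconciliation loop. This is a simplified illustration and not Kubernetes code. The `ReplicaController` class is hypothetical. Real controllers watch the API server and create or delete Pod objects rather than editing a Python set.

```python
class ReplicaController:
    """Hypothetical toy controller: keeps len(running) equal to `desired`."""

    def __init__(self, desired):
        self.desired = desired
        self.running = set()
        self._next_id = 0

    def reconcile(self):
        # Too few replicas: start new ones until the desired count is reached.
        while len(self.running) < self.desired:
            self.running.add(f"replica-{self._next_id}")
            self._next_id += 1
        # Too many replicas: stop arbitrary extras.
        while len(self.running) > self.desired:
            self.running.pop()


ctrl = ReplicaController(desired=3)
ctrl.reconcile()
print(sorted(ctrl.running))  # ['replica-0', 'replica-1', 'replica-2']

ctrl.running.discard("replica-1")  # simulate a crashed replica
ctrl.reconcile()
print(sorted(ctrl.running))  # ['replica-0', 'replica-2', 'replica-3']
```

The loop never records *how* a replica disappeared. It only compares the actual count with the desired count, which is why the same mechanism handles crashes, deletions and scaling changes.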

Why is Kubernetes cluster management crucial?

Let’s look at why Kubernetes cluster management matters with an example:

Imagine you are the owner of a popular online shopping platform, and you want to ensure your website runs smoothly and can handle many customer requests. To achieve this, you decide to use Kubernetes to manage your application infrastructure.

Because Kubernetes runs in many environments, your clusters can be hosted in different locations, such as on-premises, in the cloud or at the edge, and managed in a consistent way.

First, you set up a Kubernetes cluster, which consists of several nodes (computers or virtual machines) working together.

In your cluster, each node has its own resources, such as CPU, memory and storage capacity.

- Node A has 4 CPUs and 8 GB of memory.

- Node B has 2 CPUs and 4 GB of memory.

- Node C has 3 CPUs and 6 GB of memory.

These nodes form the backbone of your cluster and provide computational power to run your application.

Next, you deploy your online shopping application to the Kubernetes cluster, specifying the number of instances (or replicas) of your application.

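Kubernetes automatically distributes these replicas across the three available nodes. It places each replica on a node that has enough free CPU and memory to satisfy the replica's resource requests, so that the cluster's capacity is used efficiently.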

This distribution lets your application draw on the combined resources of the cluster, which improves scalability and fault tolerance. Sometimes a node fails, or runs so low on memory or disk that its pods are evicted. When that happens, Kubernetes automatically recreates the affected replicas on other healthy nodes, keeping your application available.
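Placement and failure recovery on the three nodes above can be sketched in a few lines. This is a rough model under stated assumptions. Each replica is assumed to request 1 CPU and 2 GB of memory. The scoring loosely follows the idea behind the default scheduler's "least allocated" strategy: prefer the node with the largest share of free resources. The real kube-scheduler runs many filter and score plugins. `Node`, `schedule` and `fail_node` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    cpu: float            # allocatable CPUs
    mem_gb: float         # allocatable memory in GB
    used_cpu: float = 0.0
    used_mem_gb: float = 0.0
    healthy: bool = True

    def fits(self, cpu: float, mem_gb: float) -> bool:
        # Filter step: node must be healthy and have room for the requests.
        return (self.healthy
                and self.used_cpu + cpu <= self.cpu
                and self.used_mem_gb + mem_gb <= self.mem_gb)

    def free_fraction(self) -> float:
        # Score step: average share of CPU and memory still free (higher wins).
        return ((self.cpu - self.used_cpu) / self.cpu
                + (self.mem_gb - self.used_mem_gb) / self.mem_gb) / 2


def schedule(nodes, cpu=1.0, mem_gb=2.0):
    """Place one replica on the feasible node with the largest free fraction."""
    candidates = [n for n in nodes if n.fits(cpu, mem_gb)]
    if not candidates:
        return None  # no room anywhere: the replica would stay Pending
    best = max(candidates, key=Node.free_fraction)  # ties go to the first node
    best.used_cpu += cpu
    best.used_mem_gb += mem_gb
    return best.name


def fail_node(nodes, name, placements, cpu=1.0, mem_gb=2.0):
    """Mark a node as failed and create replacement replicas on healthy nodes."""
    for n in nodes:
        if n.name == name:
            n.healthy = False
            n.used_cpu = n.used_mem_gb = 0.0
    for i, where in enumerate(placements):
        if where == name:
            placements[i] = schedule(nodes, cpu, mem_gb)
    return placements


nodes = [Node("A", 4, 8), Node("B", 2, 4), Node("C", 3, 6)]
placements = [schedule(nodes) for _ in range(4)]
print(placements)                         # ['A', 'B', 'C', 'A']
print(fail_node(nodes, "B", placements))  # ['A', 'C', 'C', 'A']
```

With four replicas, this model ends with two on Node A and one each on Nodes B and C. If Node B then fails, its replica is recreated on Node C, which has the larger free share at that moment.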

As customer traffic surges, the replicas' CPU usage climbs, and Node B, the smallest node, starts to run hot. If you have configured a Horizontal Pod Autoscaler, it detects that the replicas' average CPU utilization has exceeded its target and scales the application up by adding a replica. The scheduler can place the new replica on Node A, which has the most spare capacity.

You now have two replicas running on Node A, one on Node B and one on Node C. This dynamic scaling lets your application handle the increased load and gives your customers a seamless experience.

Once set up, your applications can be deployed, scaled and managed automatically. This saves you a lot of time and effort and helps keep your clusters running smoothly.
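The step from 3 to 4 replicas follows the Horizontal Pod Autoscaler's documented scaling rule: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The rule skips scaling when the ratio is within a tolerance of 1.0, which defaults to 0.1. The sketch below applies that rule to average CPU utilization. The 60% target and the min/max bounds are assumed values. The real HPA also handles unready pods, missing metrics and stabilization windows, which this sketch omits.

```python
import math


def desired_replicas(current_replicas, current_util, target_util,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the HPA scaling rule.

    current_util and target_util are average CPU utilization percentages,
    measured relative to each replica's CPU request (not a node's CPU).
    """
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # within tolerance: leave the count unchanged
    desired = math.ceil(current_replicas * current_util / target_util)
    return max(min_replicas, min(max_replicas, desired))


print(desired_replicas(3, current_util=80, target_util=60))  # 4
print(desired_replicas(4, current_util=63, target_util=60))  # 4 (within tolerance)
print(desired_replicas(4, current_util=20, target_util=60))  # 2
```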

Kubernetes also helps ensure the availability and reliability of your applications: it can keep them running even if one or more nodes in your cluster fail.

With Kubernetes, you can perform rolling updates to your online shopping application, introducing new features without disrupting customer access. Kubernetes replaces old replicas with new ones gradually, a few at a time, so that enough replicas keep serving traffic throughout the update.
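How many replicas change at once during a rolling update is governed by a Deployment's `maxSurge` and `maxUnavailable` settings. Both default to 25%. Kubernetes rounds the surge up and the unavailable count down. The simulation below is a simplified sketch that assumes new pods become ready instantly. The helper names are hypothetical, and the real Deployment controller waits for readiness before removing more old pods.

```python
import math


def rolling_update_bounds(replicas, max_surge_pct=25, max_unavailable_pct=25):
    """Convert percentage settings into pod counts (surge rounds up, unavailable rounds down)."""
    max_surge = math.ceil(replicas * max_surge_pct / 100)
    max_unavailable = math.floor(replicas * max_unavailable_pct / 100)
    return max_surge, max_unavailable


def simulate_rollout(replicas, max_surge, max_unavailable):
    """Return (old_pods, new_pods) after each step, assuming instant readiness."""
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("rollout could never make progress")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Start new pods without exceeding replicas + max_surge in total.
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Stop old pods while keeping at least replicas - max_unavailable running.
        old -= min(old, (old + new) - (replicas - max_unavailable))
        steps.append((old, new))
    return steps


surge, unavailable = rolling_update_bounds(4)
print(surge, unavailable)                          # 1 1
print(simulate_rollout(4, surge, unavailable))     # [(2, 1), (0, 3), (0, 4)]
```

At every step in this model, at least 3 of the 4 desired replicas are available. That is what lets customers keep shopping while the update proceeds.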

As your business expands, you can add worker nodes to the cluster, either manually or automatically with a cluster autoscaler. Kubernetes starts scheduling workloads onto the new nodes, expanding capacity and enabling further scaling.

Kubernetes also provides security features that help protect your applications from attack. These include role-based access control (RBAC), network policies and Secrets for managing sensitive data.


Best practices for Kubernetes

Kubernetes is a powerful tool for managing containerized applications. However, it can also be expensive to run. Here are some cost-saving best practices for Kubernetes:

Right-size your cloud instances and application resources

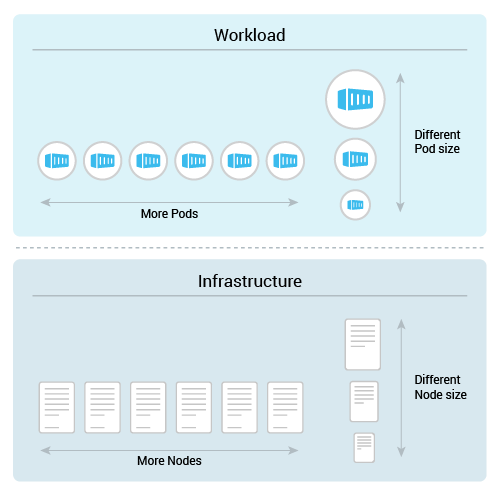

Cost-optimized Kubernetes applications heavily rely on autoscaling. Autoscaling saves costs by:

1) making workloads and their underlying infrastructure start before demand increases and

2) shutting them down when demand decreases.

Best practices for enabling autoscaling include setting appropriate resource requests and limits, configuring target utilization thresholds, ensuring quick application startup and shutdown, and using meaningful readiness and liveness probes.
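Choosing a target utilization threshold involves a trade-off. A higher target wastes less capacity but leaves less headroom while new replicas start. The sketch below uses a common rule of thumb: target = (1 − buffer) / (1 + growth). It is an assumption here and not part of the original text. In the formula, `buffer` is a safety margin and `growth` is how much traffic may rise before new replicas are ready. The input values are hypothetical.

```python
def target_utilization(buffer, growth_during_scaleup):
    """Heuristic HPA target utilization (both inputs are fractions, e.g. 0.15 = 15%)."""
    if not 0 <= buffer < 1 or growth_during_scaleup < 0:
        raise ValueError("buffer must be in [0, 1) and growth must be >= 0")
    # Keep a safety buffer, then leave room for traffic that arrives during scale-up.
    return (1 - buffer) / (1 + growth_during_scaleup)


t = target_utilization(buffer=0.15, growth_during_scaleup=0.30)
print(f"{t:.0%}")  # 65%
```

This is one reason quick application startup matters. The faster new replicas become ready, the smaller the expected growth during scale-up. That, in turn, allows a higher and cheaper utilization target.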

Avoid overprovisioning your cloud instances or application resources. Tools such as the Vertical Pod Autoscaler, run in recommendation mode, can analyze actual usage and suggest appropriate CPU and memory requests for your applications.
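The core idea of right-sizing is to derive resource requests from observed usage rather than guesses. It can be sketched as a high percentile of usage plus a safety margin. The 90th percentile and the 15% margin are assumptions, and so are the usage samples. Recommenders such as the Vertical Pod Autoscaler use a more elaborate version of this idea, and this is not their algorithm.

```python
import math


def recommend_request(usage_samples, percentile=0.9, margin=0.15):
    """Hypothetical heuristic: nearest-rank percentile of usage plus a safety margin."""
    if not usage_samples:
        raise ValueError("need at least one usage sample")
    ordered = sorted(usage_samples)
    rank = math.ceil(percentile * len(ordered))  # nearest-rank method, 1-based
    p = ordered[max(rank, 1) - 1]
    return p * (1 + margin)


# Hypothetical CPU usage samples in millicores, including one short spike.
cpu_millicores = [120, 150, 180, 200, 210, 230, 250, 260, 300, 900]
print(round(recommend_request(cpu_millicores)))  # 345
```

Sizing for the single 900m spike would roughly triple the request. A percentile-based request leaves rare spikes to limits or autoscaling instead.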

Use Kubernetes multi-tenancy. Kubernetes multi-tenancy allows you to share a single Kubernetes cluster among multiple teams or applications. This can help you save costs by reducing the number of Kubernetes clusters that you need to run.
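In Kubernetes, multi-tenancy is commonly implemented with one namespace per team, each capped by a ResourceQuota. The Python below only models the kind of check a quota performs on new resource requests. It is not the Kubernetes API. The team namespaces and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cpu: float     # CPU cores
    mem_gb: float  # memory in GB


def fits_quota(quota: Resources, used: Resources, request: Resources) -> bool:
    """True if a new request stays within the namespace's quota (simplified model)."""
    return (used.cpu + request.cpu <= quota.cpu
            and used.mem_gb + request.mem_gb <= quota.mem_gb)


# Hypothetical namespaces for two teams sharing one cluster.
quotas = {"team-checkout": Resources(4, 8), "team-search": Resources(2, 4)}
used = {"team-checkout": Resources(3, 6), "team-search": Resources(1, 1)}

print(fits_quota(quotas["team-checkout"], used["team-checkout"], Resources(1, 2)))  # True
print(fits_quota(quotas["team-search"], used["team-search"], Resources(2, 1)))      # False
```

Quotas are what make sharing safe. One team's growth cannot quietly consume the capacity another team depends on.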

Implement granular Kubernetes cost visibility and monitoring. This will help you track your Kubernetes costs and identify areas where you can save money. You can use a tool like CloudZero to help you with this.
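Granular cost visibility usually starts by attributing a shared cluster bill to the teams that use it. The sketch below shows one simple showback model: cost is split in proportion to each namespace's CPU requests. The model and the $2.40/hour figure are assumptions. Dedicated cost tools typically also account for memory, storage, network and idle capacity.

```python
def allocate_cost(cluster_cost_per_hour, cpu_requests_by_namespace):
    """Split a cluster's hourly cost across namespaces in proportion to CPU requests."""
    total = sum(cpu_requests_by_namespace.values())
    if total == 0:
        raise ValueError("no CPU requests to allocate against")
    return {ns: cluster_cost_per_hour * cpu / total
            for ns, cpu in cpu_requests_by_namespace.items()}


# Hypothetical figures: $2.40/hour for the whole cluster.
shares = allocate_cost(2.40, {"checkout": 6, "search": 3, "batch": 3})
print({ns: round(cost, 2) for ns, cost in shares.items()})
# {'checkout': 1.2, 'search': 0.6, 'batch': 0.6}
```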

To keep track of your clusters and check that you follow these best practices, start with a monitoring dashboard, such as the GKE dashboard in Google Cloud's Cloud Monitoring. It shows time-series data about cluster usage across infrastructure, workloads (for example, allocated versus requested resources) and services.

Set cost optimization policies. These policies can help you automate cost savings measures, such as scaling down your Kubernetes cluster when it is not in use.
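A schedule-based policy, such as scaling a development or staging environment down outside working hours, can be expressed as a small function. The hours and replica counts below are hypothetical. In practice, something like a CronJob or an external scheduler would apply the result to the Deployment's replica count.

```python
from datetime import datetime


def replicas_for(now, business_hours=(8, 20), day_replicas=4, night_replicas=1):
    """Hypothetical policy: full capacity on weekday business hours, minimal otherwise."""
    start, end = business_hours
    is_weekday = now.weekday() < 5  # Monday=0 ... Sunday=6
    if is_weekday and start <= now.hour < end:
        return day_replicas
    return night_replicas


print(replicas_for(datetime(2024, 1, 10, 14, 0)))  # Wednesday 14:00 -> 4
print(replicas_for(datetime(2024, 1, 13, 14, 0)))  # Saturday 14:00 -> 1
```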

Anthos Policy Controller (APC), which is built on the open source Open Policy Agent Gatekeeper project, acts as a rule enforcer for your Kubernetes clusters. It checks that your clusters comply with rules related to security, regulations and other business requirements.

You can set up constraints with APC to enforce these rules. For example, you can define best practices for your Kubernetes applications, and if a deployment doesn’t follow these practices, it won’t be allowed. This helps prevent unexpected cost increases and keeps your workloads stable when they scale up or down.
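The kind of rule such a constraint enforces can be illustrated in plain Python. Here the hypothetical rule requires every container to declare CPU and memory requests and limits. This sketch is not the Policy Controller API. Real constraints are defined through Gatekeeper constraint templates, typically written in Rego. The manifest is a hypothetical example.

```python
REQUIRED = {"requests": ("cpu", "memory"), "limits": ("cpu", "memory")}


def violations(deployment: dict) -> list:
    """List containers in a Deployment manifest that lack required resource settings."""
    problems = []
    for container in deployment["spec"]["template"]["spec"]["containers"]:
        resources = container.get("resources", {})
        for kind, names in REQUIRED.items():
            for name in names:
                if name not in resources.get(kind, {}):
                    problems.append(f"{container['name']}: missing {kind}.{name}")
    return problems


deployment = {
    "kind": "Deployment",
    "metadata": {"name": "shop-frontend"},
    "spec": {"template": {"spec": {"containers": [
        {"name": "web", "image": "example/shop:1.0",
         "resources": {"requests": {"cpu": "250m", "memory": "256Mi"}}},
    ]}}},
}

problems = violations(deployment)
print(problems)  # ['web: missing limits.cpu', 'web: missing limits.memory']
print("admitted" if not problems else "rejected")  # rejected
```

Rejecting such a deployment at admission time is cheaper than discovering later that unbounded containers inflated the bill.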

Reduce the operational overhead of managing Kubernetes infrastructure. Spending less time and effort on cluster setup, upgrades and maintenance lowers your costs. Tools like kops, which automates creating, upgrading and managing clusters, can help with this.

When cost is a constraint, where you run your managed Kubernetes clusters matters, because compute prices vary from region to region. Choose the most affordable region that still meets your customers' latency requirements. If your workload transfers data between regions, factor in the cost of moving that data.
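The region trade-off combines a latency filter, compute prices and data-transfer (egress) charges. A minimal sketch follows. The region names, prices and latencies are all hypothetical inputs, not real quotes.

```python
def cheapest_region(regions, max_latency_ms, monthly_egress_gb):
    """Pick the lowest total monthly cost among regions that meet the latency limit."""
    eligible = {name: r for name, r in regions.items()
                if r["latency_ms"] <= max_latency_ms}
    if not eligible:
        return None

    def total(r):
        # Compute cost plus the cost of moving data out of the region.
        return r["compute_per_month"] + r["egress_per_gb"] * monthly_egress_gb

    return min(eligible, key=lambda name: total(eligible[name]))


regions = {
    "region-a": {"compute_per_month": 1000, "egress_per_gb": 0.02, "latency_ms": 40},
    "region-b": {"compute_per_month": 900, "egress_per_gb": 0.08, "latency_ms": 60},
    "region-c": {"compute_per_month": 700, "egress_per_gb": 0.05, "latency_ms": 150},
}

print(cheapest_region(regions, max_latency_ms=100, monthly_egress_gb=0))     # region-b
print(cheapest_region(regions, max_latency_ms=100, monthly_egress_gb=5000))  # region-a
```

Region C is the cheapest but fails the latency limit. Region B wins on compute alone but loses once 5,000 GB of monthly transfer is included.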

Here are some additional tips for cost saving:

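- Use spot instances. Spot instances run on a cloud provider's spare capacity and are offered at a steep discount, but the provider can reclaim them at short notice. Use them for Kubernetes workloads that tolerate interruptions, such as batch jobs or stateless replicas that can be rescheduled.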

- Use preemptible instances. These discounted cloud instances can also be terminated at any time, so they suit fault-tolerant workloads that can recover from interruptions rather than workloads that must stay available.

- Use load balancing. Load balancing distributes traffic evenly across multiple Kubernetes nodes, so no single node has to be sized for peak load. This lets you run smaller nodes and use their resources more fully.

- Use caching. Caching reduces the number of requests your Kubernetes workloads make to external services, which cuts bandwidth costs and lowers latency.
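Caching can be sketched as a minimal time-to-live (TTL) cache placed in front of an external call. The pricing-service fetch function is a hypothetical stand-in. An injectable clock makes the expiry behavior easy to demonstrate. Production caches also need size limits and eviction, which this sketch omits.

```python
import time


class TTLCache:
    """Minimal time-to-live cache in front of an expensive external call (sketch)."""

    def __init__(self, fetch, ttl_seconds, clock=time.monotonic):
        self._fetch = fetch        # function that calls the external service
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries = {}         # key -> (value, expires_at)

    def get(self, key):
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and hit[1] > now:
            return hit[0]          # fresh cached value: no external request
        value = self._fetch(key)
        self._entries[key] = (value, now + self._ttl)
        return value


calls = []


def fetch_price(sku):
    calls.append(sku)  # stands in for a request to a hypothetical pricing service
    return 42


fake_now = [0.0]
cache = TTLCache(fetch_price, ttl_seconds=60, clock=lambda: fake_now[0])
for _ in range(100):
    cache.get("sku-123")
print(len(calls))  # 1

fake_now[0] = 61.0  # the cached entry has expired
cache.get("sku-123")
print(len(calls))  # 2
```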

Preemptible Virtual Machines (PVMs) are short-lived instances with no availability guarantees that run for at most 24 hours. They cost up to 80% less than regular VMs. PVMs work best for jobs that can handle interruptions and don’t require constant availability, such as batch processing or other fault-tolerant jobs.
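The savings from moving part of a node pool to PVMs can be estimated with simple arithmetic. The sketch below treats the 80% figure as a best-case discount. It adds a hypothetical 10% overhead for redoing interrupted work. The $0.20/hour price and the 60% share are also assumptions.

```python
def blended_hourly_cost(on_demand_price, nodes, preemptible_share,
                        discount=0.80, rework_overhead=0.10):
    """Estimate hourly cost when a share of nodes runs on preemptible VMs.

    discount: fraction saved per preemptible node (best case as cited above).
    rework_overhead: hypothetical extra capacity to redo interrupted work.
    """
    if not 0 <= preemptible_share <= 1:
        raise ValueError("preemptible_share must be between 0 and 1")
    regular = nodes * (1 - preemptible_share) * on_demand_price
    preemptible = (nodes * preemptible_share * on_demand_price
                   * (1 - discount) * (1 + rework_overhead))
    return regular + preemptible


baseline = blended_hourly_cost(0.20, nodes=10, preemptible_share=0.0)
mixed = blended_hourly_cost(0.20, nodes=10, preemptible_share=0.6)
print(round(baseline, 3), round(mixed, 3))  # 2.0 1.064
```

Under these assumptions, moving 60% of the pool to PVMs cuts the hourly bill by roughly half, even after paying for rework.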

Step up: Streamline Kubernetes management

By adopting these cost-saving best practices, you can make full use of Kubernetes’ capabilities while significantly reducing your spending. As businesses continue to scale and evolve in a dynamic digital landscape, these measures can make a real difference to long-term success and to staying ahead of the competition. Following Kubernetes best practices is not only a smart financial decision but also a strategic step toward a resilient, agile and cost-effective ecosystem. By teaming up with experts for a managed Kubernetes service, businesses can focus on their applications while leaving infrastructure management in capable hands.