As we explored in Part 1, understanding artificial intelligence (AI) hallucinations and their implications is crucial for developing reliable AI systems. We covered the basics of what AI hallucinations are, their historical context, potential consequences, and some real-world examples. In this part, we will delve deeper into the various types of AI hallucinations, their causes, and the solutions to mitigate them.

The phenomenon of generative AI hallucinations presents a significant challenge in the advancement of artificial intelligence, as these inaccuracies can lead to unreliable outputs and erode trust in AI systems. Recognizing and addressing these hallucinations is essential for creating AI that is effective and trustworthy. Companies that prioritize responsible AI practices are better positioned to leverage AI’s full potential while mitigating associated risks.

The complexities of AI hallucinations require a multi-faceted approach. Identifying and understanding the different types of hallucinations can help in developing targeted strategies to prevent them. The root causes of these hallucinations often lie in the quality of training data, the methodologies used in model training, and the AI’s ability to interpret context accurately.

Solving these challenges involves a combination of technical solutions and human oversight, both of which are described in detail in this post. Engaging AI consultants who specialize in addressing these issues can provide tailored solutions that enhance AI performance and reliability.

Building trust in AI systems is not just about fixing immediate issues but also about establishing a culture of continuous improvement and ethical considerations. This includes regular updates to training data, refining error detection and correction methods, and adapting to new challenges and scenarios. By fostering a responsible AI ecosystem, businesses can ensure that their AI systems are innovative, reliable, and trustworthy, ultimately driving greater value and confidence among stakeholders.

In this second part, we continue to explore the intricacies of AI hallucinations, the steps to prevent and fix them, and how to build a forward-looking perspective that integrates robust AI practices with ethical considerations. By understanding and addressing these challenges, we can unlock AI’s transformative potential while safeguarding against its pitfalls.

Types of AI hallucinations

AI hallucinations can manifest in various forms, often unexpectedly, ranging from minor inconsistencies to completely fabricated or contradictory information. Here are some types of AI hallucinations:

Data hallucinations: Generating data that is not present in the training dataset.

- Prompt: List three elements of the periodic table.

- Output: Hydrogen, Helium, and Unicornium.

Contextual hallucinations: Generating outputs that are not supported by the input context.

- Prompt: Describe the process of photosynthesis.

- Output: Plants use sunlight to make food. Photosynthesis occurs in darkness.

Semantic hallucinations: Generating outputs that are semantically incorrect or unrelated to the input.

- Prompt: Explain the theory of relativity.

- Output: Apples are red, and bananas are yellow.

Visual hallucinations: Generating images or videos that are not present in the input data.

- Prompt: Show me a picture of the Eiffel Tower.

- Output: Here is a picture (followed by an image of the Statue of Liberty).

Prompt contradiction hallucinations: AI produces a response that contradicts the intent of the initial prompt provided.

- Prompt: Write a recipe for chocolate cake.

- Output: Here’s a poem about the ocean waves.

Irrelevant or random hallucinations: AI generates information that is irrelevant or random.

- Prompt: Describe the history of ancient Egypt.

- Output: Elephants have large ears to help cool them down.

Contradictory sentence hallucinations: This occurs when an AI model generates a sentence that contradicts a previous statement within the same context.

- Prompt: Describe a sunny day.

- Output: The sun was shining brightly. It was a dark and stormy day.

Wrong association hallucinations: AI makes connections between concepts that are unrelated.

- Prompt: Discuss the causes of climate change.

- Output: The sun is a star, and stars twinkle in the night sky.
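Several of the types above (semantic, prompt contradiction, irrelevant, and wrong association hallucinations) share a trait that even a very crude automated check can pick up: the output barely mentions anything from the prompt. The Python sketch below flags such outputs by measuring how many of the prompt's content words reappear in the response. It is an illustrative heuristic under our own assumptions; the stopword list, the 0.1 threshold, and the function names are hypothetical and not taken from any library.

```python
import re

# Hypothetical, hand-picked stopword list for this sketch (not a standard list).
STOPWORDS = {
    "a", "about", "an", "and", "are", "describe", "discuss", "explain",
    "for", "here", "in", "is", "it", "list", "me", "of", "on", "s",
    "show", "the", "to", "was", "with", "write",
}


def content_words(text: str) -> set:
    """Lowercase the text, split it into alphabetic tokens, and drop stopwords."""
    return {t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS}


def overlap_score(prompt: str, output: str) -> float:
    """Fraction of the prompt's content words that also appear in the output."""
    prompt_words = content_words(prompt)
    if not prompt_words:
        return 1.0  # nothing to compare against, so never flag
    return len(prompt_words & content_words(output)) / len(prompt_words)


def looks_off_topic(prompt: str, output: str, threshold: float = 0.1) -> bool:
    """Flag outputs that share (almost) no content words with the prompt."""
    return overlap_score(prompt, output) < threshold


print(looks_off_topic("Describe the history of ancient Egypt.",
                      "Elephants have large ears to help cool them down."))  # prints True
```

On the examples above, this flags the ancient Egypt prompt answered with a fact about elephants, but not the photosynthesis or sunny-day outputs, because those reuse words from the prompt while contradicting themselves. Contextual and contradictory sentence hallucinations need stronger, meaning-aware checks, and any purely lexical check will misfire on valid answers that use synonyms.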


What causes hallucinations in AI?

AI hallucinations can occur due to several reasons, including:

- Incomplete or ambiguous data: When an AI model is provided with incomplete or ambiguous data during training or inference, it may fill in the gaps or make assumptions that lead to hallucinations.

- Overfitting: A model that overfits memorizes noise and quirks of its training data instead of learning patterns that generalize. On new inputs, it may then reproduce those memorized patterns as confident but false information.

- Biases in data: Biases present in the training data can lead the AI model to generate hallucinations that reflect those biases, resulting in inaccurate or skewed outputs.

- Complexity of the model: Highly complex AI models, such as deep neural networks, may exhibit hallucinations that are hard to anticipate: their many interacting layers and parameters make it challenging to interpret how the model arrives at a given conclusion, and therefore to trace and correct its errors.

- Lack of contextual understanding: AI models may struggle to grasp the context or nuances of a situation, leading to the generation of irrelevant or nonsensical information.

- Adversarial attacks: Deliberate manipulation of the input data to deceive the AI model can also result in hallucinations.

Being aware of these factors, and treating AI-generated outputs with appropriate caution, is crucial for mitigating the risk of hallucinations and inaccuracies.
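Of these causes, overfitting is the easiest to check for directly. A common practice is to hold out a validation set and compare its loss with the training loss after each epoch: when training loss keeps falling but validation loss stops improving, the model is starting to memorize rather than generalize. The sketch below implements a simple early-stopping rule along those lines; the function name, the `patience` default, and the loss values in the example are illustrative assumptions, not measurements.

```python
from typing import Optional, Sequence


def early_stopping_epoch(
    train_losses: Sequence[float],
    val_losses: Sequence[float],
    patience: int = 2,
) -> Optional[int]:
    """Return the best epoch if overfitting is detected, otherwise None.

    Overfitting is flagged once validation loss has failed to beat its best
    value for `patience` consecutive epochs while training loss is still
    lower than it was at that best epoch.
    """
    if len(train_losses) != len(val_losses):
        raise ValueError("train_losses and val_losses must have the same length")
    best_val = float("inf")
    best_epoch = 0
    stale = 0  # consecutive epochs without a new best validation loss
    for epoch, (train, val) in enumerate(zip(train_losses, val_losses)):
        if val < best_val:
            best_val, best_epoch, stale = val, epoch, 0
            continue
        stale += 1
        if stale >= patience and train < train_losses[best_epoch]:
            return best_epoch  # restore the checkpoint saved at this epoch
    return None


# Illustrative (made-up) loss curves: validation loss bottoms out at epoch 2.
train = [1.00, 0.70, 0.50, 0.35, 0.25, 0.18]
val = [1.10, 0.80, 0.65, 0.70, 0.78, 0.90]
print(early_stopping_epoch(train, val))  # prints 2
```

In a real training loop, you would save a checkpoint whenever validation loss reaches a new best and restore the one from the returned epoch.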

AI hallucination causes and solutions

| Cause | Description | Solution |
| --- | --- | --- |
| Data quality | Poor-quality data with noise, errors, biases, or inconsistencies | Use high-quality, domain-specific data and implement rigorous data cleaning processes. |
| Generation method | Biases in training methods or incorrect decoding | Employ advanced training techniques like RLHF and adversarial training. |
| Input context | Ambiguous or contradictory prompts | Ensure clear and specific inputs for the AI model. |
| Lack of fine-tuning | Generic models not tailored to specific tasks | Fine-tune models with focused, task-specific datasets. |
| Insufficient error detection | Lack of mechanisms to identify and correct hallucinations during training | Implement robust error detection and correction during training phases. |
| Absence of expert guidance | Inadequate oversight from AI specialists | Engage AI consultants for tailored solutions and continuous improvement practices. |
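As a concrete example of the data quality row, here is a minimal data-cleaning sketch in Python. It assumes a hypothetical training set of question-answer pairs and applies a few of the checks that row describes: it normalizes text, drops incomplete records, removes duplicates, and discards questions that appear with conflicting answers, since a model trained on contradictory labels has no single correct answer to learn. The record format and function names are assumptions, and a real pipeline would usually need further checks, such as bias audits and near-duplicate detection.

```python
import unicodedata


def normalize(text: str) -> str:
    """Apply Unicode NFKC normalization and collapse runs of whitespace."""
    return " ".join(unicodedata.normalize("NFKC", text).split())


def clean_qa_pairs(pairs, min_answer_chars=2):
    """Clean a list of (question, answer) training pairs.

    - normalizes Unicode and whitespace,
    - drops pairs with an empty question or a too-short answer,
    - removes duplicates (compared case-insensitively),
    - drops questions that appear with more than one distinct answer.
    """
    # question key -> {answer key -> first (question, answer) seen}
    answers_by_question = {}
    for question, answer in pairs:
        q, a = normalize(question), normalize(answer)
        if not q or len(a) < min_answer_chars:
            continue  # incomplete record
        variants = answers_by_question.setdefault(q.lower(), {})
        variants.setdefault(a.lower(), (q, a))  # keeps only the first duplicate
    return [
        next(iter(variants.values()))
        for variants in answers_by_question.values()
        if len(variants) == 1  # skip questions with conflicting answers
    ]


raw = [
    ("What is the chemical symbol for gold?", "Au"),
    ("what is the chemical symbol for  gold?", "au"),  # duplicate
    ("What is the boiling point of water at sea level?", "100 degrees Celsius"),
    ("What is the boiling point of water at sea level?", "90 degrees Celsius"),  # conflict
    ("", "An answer with no question"),  # incomplete
]
print(clean_qa_pairs(raw))  # prints [('What is the chemical symbol for gold?', 'Au')]
```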

How to fix AI hallucinations?

Addressing AI hallucinations involves a combination of technical interventions and expert guidance. One primary approach is fine-tuning AI models with high-quality, domain-specific data. Fine-tuning allows models to adjust to specific tasks and contexts, reducing the likelihood of generating irrelevant or incorrect outputs. This process involves retraining a pre-existing model on a smaller, more focused dataset, which helps the AI understand and generate more accurate responses relevant to a particular application.
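The data-preparation side of fine-tuning can be sketched in a few lines. The example below shuffles a small set of task-specific prompt-completion pairs, holds out a validation split (useful for catching the overfitting discussed earlier), and writes both splits as JSON Lines files, a layout many fine-tuning tools accept. The `prompt`/`completion` field names, the file names, the example records, and the 10% validation fraction are all assumptions; check the schema your own fine-tuning toolkit expects.

```python
import json
import os
import random
import tempfile


def write_finetuning_splits(examples, train_path, val_path, val_fraction=0.1, seed=0):
    """Shuffle (prompt, completion) pairs and write train/validation JSONL files.

    Each output line is one JSON object. The "prompt"/"completion" field names
    are an assumption; adapt them to your fine-tuning toolkit's schema.
    Returns the number of records written to (train_path, val_path).
    """
    records = [{"prompt": p, "completion": c} for p, c in examples]
    random.Random(seed).shuffle(records)  # fixed seed for a reproducible split
    n_val = max(1, int(len(records) * val_fraction)) if len(records) > 1 else 0
    splits = ((train_path, records[n_val:]), (val_path, records[:n_val]))
    for path, subset in splits:
        with open(path, "w", encoding="utf-8") as f:
            for record in subset:
                f.write(json.dumps(record, ensure_ascii=False) + "\n")
    return len(records) - n_val, n_val


# Hypothetical domain-specific examples (placeholders, not real data).
examples = [
    (f"Summarize support ticket #{i}.", f"Hypothetical reference summary for ticket #{i}.")
    for i in range(20)
]
out_dir = tempfile.mkdtemp()
counts = write_finetuning_splits(
    examples,
    os.path.join(out_dir, "train.jsonl"),
    os.path.join(out_dir, "val.jsonl"),
)
print(counts)  # prints (18, 2)
```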

Another critical aspect is enhancing the AI model’s training methodology. Techniques such as reinforcement learning from human feedback (RLHF) can substantially improve a model’s reliability. In RLHF, human evaluators compare or rate the AI’s outputs; their preferences are used to train a reward model, and the AI model’s parameters are then adjusted with reinforcement learning so that it produces outputs the reward model scores highly. Additionally, implementing robust error detection and correction mechanisms during the AI’s training phase can help identify and rectify potential hallucinations before they become problematic in real-world applications.
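The reward-modeling step of RLHF can be sketched in plain Python. Human preferences are commonly collected as pairs (a preferred and a rejected response), and a reward model is trained with the pairwise loss -log sigmoid(r(chosen) - r(rejected)), which pushes preferred responses to score higher. This toy version assumes a linear reward model over two hypothetical hand-made features (whether a response is supported by a source, and whether it contains an unverifiable claim) instead of a neural network, and it omits the second stage, in which the language model itself is optimized against the reward model with a reinforcement learning algorithm such as PPO.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -log(sigmoid(x))."""
    return math.log1p(math.exp(-x)) if x >= 0 else -x + math.log1p(math.exp(x))


def reward(weights, features):
    """Linear reward model: r(x) = w . phi(x)."""
    return sum(w * f for w, f in zip(weights, features))


def pairwise_loss(weights, pairs):
    """Mean of -log sigmoid(r(chosen) - r(rejected)) over preference pairs."""
    return sum(
        neg_log_sigmoid(reward(weights, chosen) - reward(weights, rejected))
        for chosen, rejected in pairs
    ) / len(pairs)


def train_reward_model(pairs, n_features, lr=0.5, epochs=200):
    """Fit reward weights to human preference pairs by gradient descent."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        grad = [0.0] * n_features
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            coeff = -sigmoid(-margin)  # derivative of -log sigmoid(margin)
            for i in range(n_features):
                grad[i] += coeff * (chosen[i] - rejected[i])
        weights = [w - lr * g / len(pairs) for w, g in zip(weights, grad)]
    return weights


# Hypothetical features: [supported_by_source, contains_unverifiable_claim].
# Each pair is (features of the preferred response, features of the rejected one).
preferences = [
    ([1.0, 0.0], [0.0, 1.0]),
    ([1.0, 0.0], [1.0, 1.0]),
    ([1.0, 1.0], [0.0, 1.0]),
]
weights = train_reward_model(preferences, n_features=2)
print(weights)
```

After training, the first weight is positive and the second negative: the toy reward model has learned from the preference pairs to favor grounded responses and penalize unverifiable claims.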

Engaging an AI consultant can be invaluable in this context. These experts can assess the specific needs and challenges of a business, tailoring solutions that enhance AI model performance and reliability. An AI consultant can guide the integration of advanced techniques, such as adversarial training, which exposes the AI model to challenging scenarios to improve its robustness. Moreover, they can help establish best practices for continuous monitoring and model evaluation, ensuring that the AI system remains accurate and trustworthy over time.
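For language models, adversarial training often involves adding deliberately tricky or misleading prompts, paired with correct target responses, to the training data, which is hard to show in a few lines. The underlying idea is easier to see on a toy classifier. The sketch below swaps in the fast gradient sign method (FGSM) on a hand-written logistic regression: at each step it crafts a worst-case perturbed copy of every training example and trains on both the clean and the perturbed copy. The model, the `eps` perturbation budget, and the data are illustrative assumptions, not a recipe for a production model.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def predict(w, b, x):
    """Probability of class 1 under a logistic regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def log_loss(w, b, x, y):
    """Binary cross-entropy for a single example."""
    p = predict(w, b, x)
    return -math.log(p if y == 1 else 1.0 - p)


def fgsm(w, b, x, y, eps):
    """Fast gradient sign method: move each feature by eps in the direction
    that increases the loss. For logistic regression, the gradient of the
    log loss with respect to the input is (p - y) * w."""
    p = predict(w, b, x)
    return [
        xi + eps * math.copysign(1.0, (p - y) * wi) if (p - y) * wi != 0 else xi
        for xi, wi in zip(x, w)
    ]


def train(data, eps=0.0, lr=0.1, epochs=100):
    """SGD on logistic regression; with eps > 0, also train on FGSM examples."""
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))  # adversarial copy
            for xb, yb in batch:
                err = predict(w, b, xb) - yb
                w = [wi - lr * err * xi for wi, xi in zip(w, xb)]
                b -= lr * err
    return w, b


# Toy one-feature dataset: negative values are class 0, positive are class 1.
data = [([-2.0], 0), ([-1.0], 0), ([1.0], 1), ([2.0], 1)]
w_std, b_std = train(data)           # standard training
w_adv, b_adv = train(data, eps=0.5)  # adversarial training
```

To compare the two models, you could measure their accuracy on FGSM-perturbed versions of held-out points; the adversarially trained model is fitted to stay correct under perturbations of up to `eps`.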

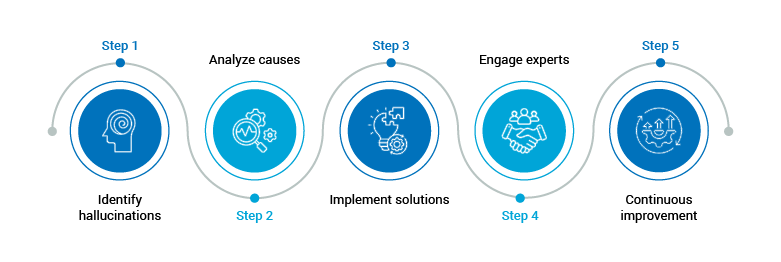

Steps to fix AI hallucinations

1. Identify hallucinations

- Monitor AI outputs regularly.

- Use automated tools to detect inconsistencies.

2. Analyze causes

- Evaluate data quality.

- Assess training methods.

- Review input contexts.

3. Implement solutions

- Fine-tune models with domain-specific data.

- Apply reinforcement learning from human feedback (RLHF).

- Introduce adversarial training for robustness.

4. Engage experts

- Consult with AI specialists.

- Implement tailored solutions.

- Establish continuous monitoring and feedback mechanisms.

5. Continuous improvement

- Regularly update training data.

- Refine error detection and correction methods.

- Adapt to new challenges and scenarios.
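For step 1, one simple automated check is self-consistency: ask the model the same question several times with sampling enabled and compare the answers. Fabricated details often vary from sample to sample, while well-supported facts tend to repeat, so low agreement is a useful signal for routing an output to human review. The sketch below assumes the samples have already been collected (the model call itself is left out). The normalization rules and the 0.6 agreement threshold are illustrative assumptions, and exact string matching only suits short, factual answers.

```python
from collections import Counter


def normalize_answer(text: str) -> str:
    """Lowercase, trim a trailing period, and collapse whitespace."""
    return " ".join(text.strip().lower().rstrip(".").split())


def consistency_check(samples, min_agreement=0.6):
    """Return (majority_answer, agreement, flagged) for sampled answers.

    `samples` are several answers to the same prompt, generated with sampling
    enabled. Low agreement suggests the model may be guessing.
    """
    if not samples:
        raise ValueError("need at least one sample")
    counts = Counter(normalize_answer(s) for s in samples)
    answer, votes = counts.most_common(1)[0]
    agreement = votes / len(samples)
    return answer, agreement, agreement < min_agreement


# Illustrative samples; in practice these would come from repeated model calls.
print(consistency_check(["Paris.", "paris", "Paris", "Lyon", "Paris"]))  # prints ('paris', 0.8, False)
print(consistency_check(["1912", "1915", "1908", "1912", "1921"]))  # prints ('1912', 0.4, True)
```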

How do we build trust with AI and develop a forward-looking perspective?

This is not just a question, but a crucial task that demands our immediate attention and action. Organizations must prioritize building trust in AI systems, as this is a key factor in successful AI implementation. By actively mitigating AI hallucinations and implementing best practices for AI, businesses can unlock the transformative potential of AI while safeguarding against its pitfalls. Embracing responsible AI practices today can lead your organization toward a future where technology and trust go hand in hand.

Incorporating these strategies not only lessens risks but also positions companies to harness AI’s full potential responsibly. By fostering a culture of continuous feedback and improvement, businesses can maintain the integrity and reliability of their AI systems, ultimately driving innovation and building lasting trust with stakeholders.