In today’s data-driven world, the volume of data that organizations generate, capture and consume has increased tremendously. Since the onset of the COVID-19 pandemic, as businesses have shifted to remote work models, data analytics has become even more important for organizations.

The pandemic has pushed enterprises to a new stage, where they need to integrate data from many different sources and systems. The massive amount of data being generated must be integrated and curated so that it is available in real time across the organization. This is where data integration comes into the picture.

One of the most powerful tools for integrating data with agility and efficiency is Azure Data Factory (ADF). This blog post explains how Azure Data Factory can help enterprises with data integration.

Enterprise data integration simplified by ADF

Azure Data Factory is a data integration service that enables you to move, process and store huge amounts of data across a wide range of storage technologies. Using ADF, you can create data-driven workflows (called pipelines) and schedule them to ingest data from disparate sources, whether they are on Azure, on-premises, or in other clouds. To let you acquire data from these sources, ADF offers more than 90 built-in, maintenance-free connectors. With your data in one place, Azure Data Factory can help you drive more value from it and make informed business decisions.
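To make the idea of a pipeline more concrete, here is a minimal sketch of what a pipeline definition with a single Copy activity can look like, built as a Python dictionary and printed as JSON. The pipeline name, the dataset names and the helper function `build_copy_pipeline` are assumptions made up for this illustration; the source and sink types would depend on the connectors you actually use.

```python
import json


def build_copy_pipeline(name, source_dataset, sink_dataset):
    """Return an ADF-style pipeline definition with one Copy activity.

    The dataset names are hypothetical; they would have to match
    datasets that already exist in your data factory.
    """
    return {
        "name": name,
        "properties": {
            "activities": [
                {
                    "name": "CopySourceToSink",
                    "type": "Copy",
                    "inputs": [
                        {"referenceName": source_dataset, "type": "DatasetReference"}
                    ],
                    "outputs": [
                        {"referenceName": sink_dataset, "type": "DatasetReference"}
                    ],
                    # Assumed source/sink types for a blob-to-SQL copy.
                    "typeProperties": {
                        "source": {"type": "BlobSource"},
                        "sink": {"type": "SqlSink"},
                    },
                }
            ]
        },
    }


pipeline = build_copy_pipeline("IngestSalesData", "SalesBlobDataset", "SalesSqlDataset")
print(json.dumps(pipeline, indent=2))
```

In practice you would usually author this definition in the ADF visual designer; the JSON is what gets stored behind the scenes.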

The key features of Azure Data Factory are:

- Pay-as-you-go model

- Autonomous ETL/ELT

- Resource consistency

- Cost-effectiveness

1. On-premises data integration

Azure Data Factory enables you to build a modern data warehouse. It helps you transform and analyze on-premises data in a code-free manner. ADF uses connectors to reach on-premises data sources such as databases and file shares, and orchestrates the data movement at scale. On-premises data can then be combined with additional data, such as logs.

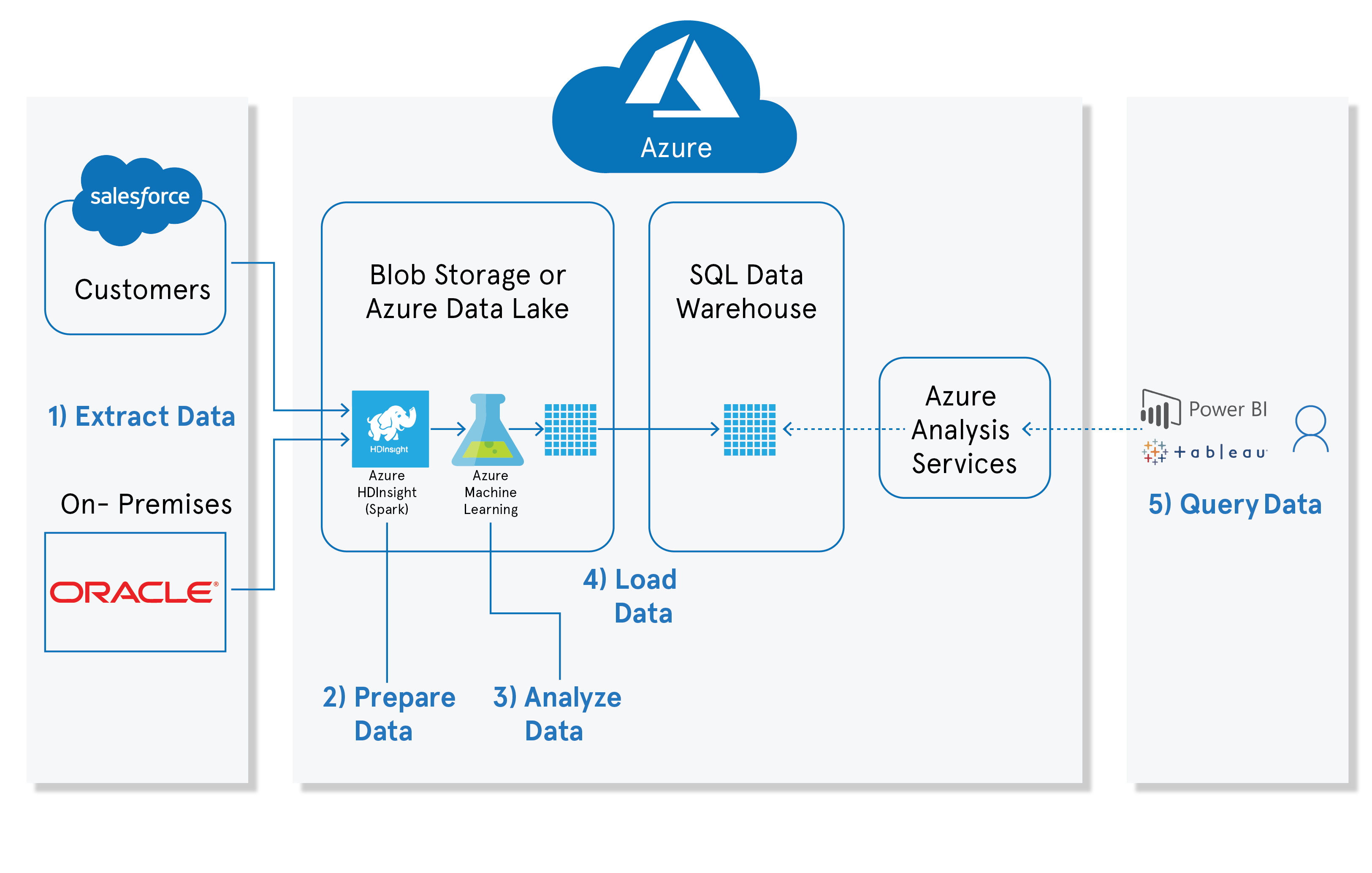

For on-premises data integration, ADF performs the following steps:

- It extracts various types of on-premises data from different sources and stores it in a data lake.

- Then, it removes duplicate records, processes the data and converts data types to the required form.

- If needed, the next step is to apply machine learning to get rich and powerful data insights.

- In the end, the aggregated data can be loaded into relational databases for analysis.
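The steps above can be sketched in plain Python to show the shape of the flow. This is not ADF code: an inline CSV string stands in for the data lake, an in-memory SQLite database stands in for the relational database, the optional machine learning step is skipped, and all names and sample values are invented for the example.

```python
import csv
import io
import sqlite3

# Stand-in for raw on-premises data landed in a data lake (sample values).
RAW_CSV = """order_id,region,amount
1,North,100.50
2,South,80.00
2,South,80.00
3,North,19.50
"""


def extract(text):
    """Read the raw rows; every value is still a string at this point."""
    return list(csv.DictReader(io.StringIO(text)))


def clean(rows):
    """Drop duplicate orders and convert each column to its required type."""
    seen = set()
    cleaned = []
    for row in rows:
        if row["order_id"] in seen:
            continue
        seen.add(row["order_id"])
        cleaned.append(
            {
                "order_id": int(row["order_id"]),
                "region": row["region"],
                "amount": float(row["amount"]),
            }
        )
    return cleaned


def aggregate(rows):
    """Sum the order amounts per region."""
    totals = {}
    for row in rows:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


def load(totals, conn):
    """Write the aggregated totals into a relational table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region (region TEXT PRIMARY KEY, total REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)", sorted(totals.items())
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
totals = aggregate(clean(extract(RAW_CSV)))
load(totals, conn)
result = conn.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
print(result)  # [('North', 120.0), ('South', 80.0)]
```

In ADF, each of these functions would roughly correspond to an activity or data flow step in a pipeline rather than to hand-written code.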

2. Cloud data integration

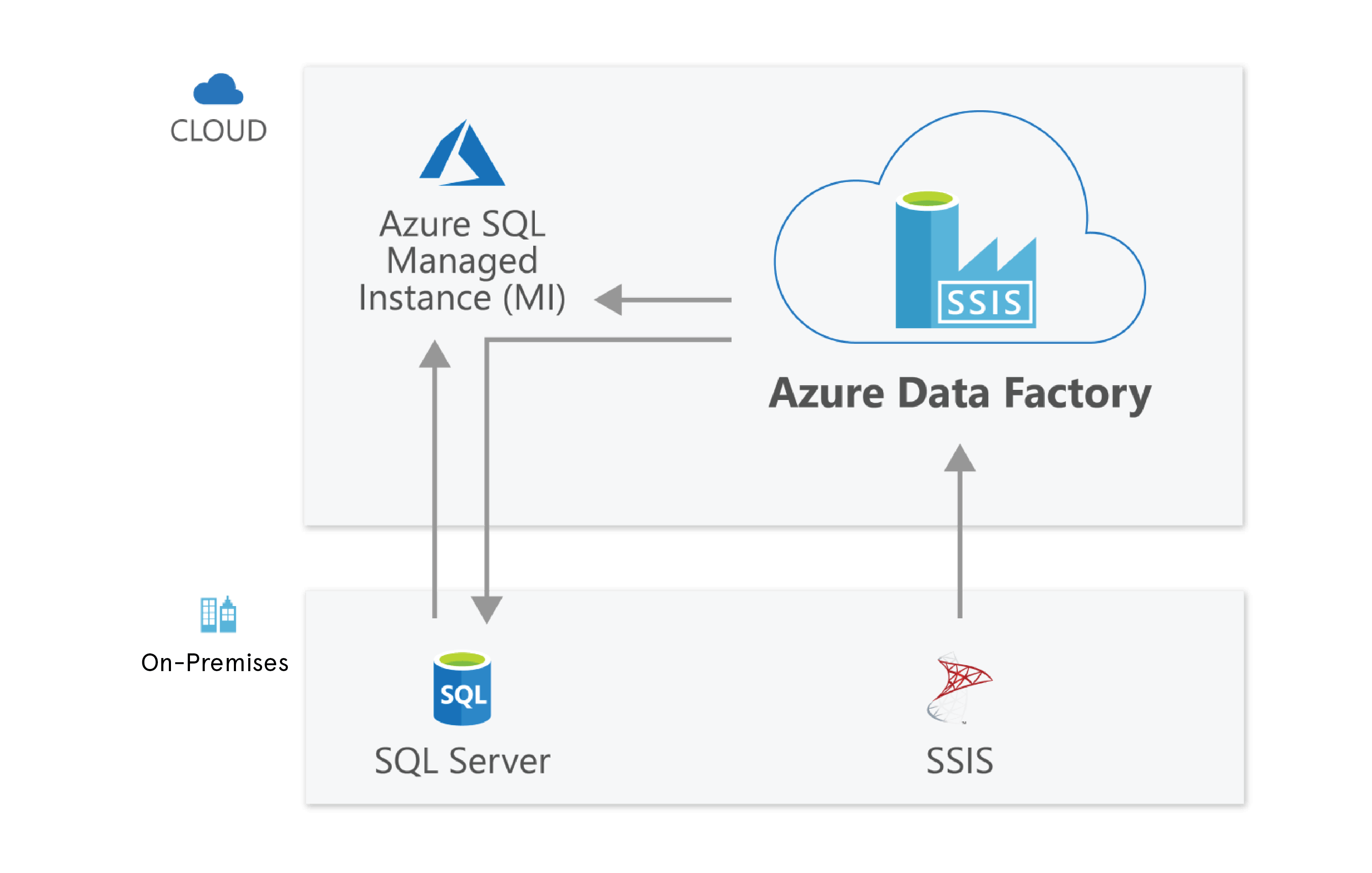

ADF’s cloud-first approach is well worth considering for your organization’s data integration needs. Azure Data Factory is itself a managed cloud service that allows you to create and run pipelines in the cloud. You can migrate existing SSIS (SQL Server Integration Services) workloads to ADF and run SSIS packages on Azure, with access to both cloud and on-premises data services. ADF connectors let you connect to multiple cloud data sources, including SaaS applications. With ADF pipelines, you can automate the data integration process throughout your modern cloud-based data warehouse.

ADF integration with different tools

- ADF integration with DevOps

You can integrate Azure Data Factory with Azure DevOps Git to enable continuous integration and continuous deployment (CI/CD), source control and release management. There are two ways to deploy Azure Data Factory with DevOps.

1. ARM Template: Using this Microsoft approach, you can deploy ADF with DevOps by creating or updating ARM template files. Also, using Azure DevOps, you can build your own CI/CD process for ADF deployment.

2. JSON files: Using this custom approach, you can deploy ADF with DevOps directly from the code, i.e., the JSON files, via the REST API. This is the direct deployment approach.
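As a rough sketch of the direct approach, the snippet below prepares (but does not send) a request that would create or update a pipeline from its JSON definition through the Azure management REST API. The subscription ID, resource group, factory name, pipeline definition and token are placeholders, and `build_pipeline_put_request` is a hypothetical helper written for this post; acquiring a real access token is outside the scope of the example.

```python
import json
import urllib.request

API_VERSION = "2018-06-01"  # Data Factory (V2) management API version

# Stand-in for the contents of a pipeline JSON file kept in source control.
PIPELINE_JSON = '{"name": "IngestSalesData", "properties": {"activities": []}}'


def build_pipeline_put_request(subscription_id, resource_group, factory, definition, token):
    """Prepare a PUT request that creates or updates one pipeline."""
    url = (
        "https://management.azure.com"
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.DataFactory/factories/{factory}"
        f"/pipelines/{definition['name']}?api-version={API_VERSION}"
    )
    # The pipeline name travels in the URL; the body carries its properties.
    body = json.dumps({"properties": definition["properties"]}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_pipeline_put_request(
    subscription_id="00000000-0000-0000-0000-000000000000",  # placeholder
    resource_group="my-resource-group",                      # placeholder
    factory="my-data-factory",                               # placeholder
    definition=json.loads(PIPELINE_JSON),
    token="<access-token>",                                  # placeholder
)
print(request.get_method(), request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

A release pipeline in Azure DevOps could loop over every JSON file in the repository and issue one such request per resource.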

- ADF integration with Power BI

You can integrate Azure Data Factory with Power BI to visualize your data and keep reports supplied with fresh data automatically. Power BI exposes REST APIs that, once the caller is authenticated, let you refresh your datasets programmatically, for example at the end of an ADF pipeline run. Integrating ADF with Power BI also helps you monitor the different stages of ADF pipelines, datasets and activities.
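One possible way to wire up a programmatic refresh is a Web activity at the end of a pipeline that calls the Power BI REST API. The sketch below builds such an activity definition as a Python dictionary. It assumes the data factory's managed identity has been granted access to the Power BI workspace; the workspace (group) ID, dataset ID and the helper `build_powerbi_refresh_activity` are placeholders invented for this example.

```python
import json


def build_powerbi_refresh_activity(group_id, dataset_id):
    """Return an ADF-style Web activity that requests a dataset refresh."""
    url = (
        "https://api.powerbi.com/v1.0/myorg"
        f"/groups/{group_id}/datasets/{dataset_id}/refreshes"
    )
    return {
        "name": "RefreshPowerBIDataset",
        "type": "WebActivity",
        "typeProperties": {
            "url": url,
            "method": "POST",
            "body": {"notifyOption": "NoNotification"},
            # Assumption: the factory's managed identity can reach the workspace.
            "authentication": {
                "type": "MSI",
                "resource": "https://analysis.windows.net/powerbi/api",
            },
        },
    }


refresh_activity = build_powerbi_refresh_activity(
    group_id="11111111-1111-1111-1111-111111111111",    # placeholder workspace ID
    dataset_id="22222222-2222-2222-2222-222222222222",  # placeholder dataset ID
)
print(json.dumps(refresh_activity, indent=2))
```

Placing this activity after the last load step means reports are refreshed only once new data has actually landed.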

- ADF integration with HDInsight

Azure Data Factory can automatically create an on-demand HDInsight Hadoop cluster to process an input data slice. Once data processing is complete, the cluster is deleted automatically. The HDInsight activities are among ADF’s data transformation activities and can process big data at scale.
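For illustration, an on-demand cluster is described by a linked service, and a transformation activity then points at it. The sketch below builds both definitions as Python dictionaries. The names, cluster size, version, time-to-live and script path are assumed sample values, the helper functions are hypothetical, and the authentication fields a real linked service needs are left out for brevity.

```python
import json


def build_on_demand_hdinsight(name, storage_linked_service, time_to_live="00:15:00"):
    """Return an on-demand HDInsight linked service definition (sample values)."""
    return {
        "name": name,
        "properties": {
            "type": "HDInsightOnDemand",
            "typeProperties": {
                "clusterType": "hadoop",
                "clusterSize": 4,              # assumed number of worker nodes
                "timeToLive": time_to_live,    # idle time before the cluster is deleted
                "version": "3.6",              # assumed cluster version
                "linkedServiceName": {
                    "referenceName": storage_linked_service,
                    "type": "LinkedServiceReference",
                },
            },
        },
    }


def build_hive_activity(name, cluster_linked_service, storage_linked_service, script_path):
    """Return a Hive activity that runs a script on the on-demand cluster."""
    return {
        "name": name,
        "type": "HDInsightHive",
        "linkedServiceName": {
            "referenceName": cluster_linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "scriptPath": script_path,
            "scriptLinkedService": {
                "referenceName": storage_linked_service,
                "type": "LinkedServiceReference",
            },
        },
    }


linked_service = build_on_demand_hdinsight("OnDemandHDInsight", "AzureStorageLinkedService")
hive_activity = build_hive_activity(
    "TransformWithHive",
    "OnDemandHDInsight",
    "AzureStorageLinkedService",
    "scripts/transform.hql",  # hypothetical script location
)
print(json.dumps({"linkedService": linked_service, "activity": hive_activity}, indent=2))
```

The time-to-live setting is the trade-off to watch: a short value saves cost, while a longer one lets consecutive runs reuse a cluster that is already up.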

Conclusion

In the world of big data, organizations need to embrace new approaches to data analytics that help them refine enormous stores of diverse data into actionable business insights. Azure Data Factory is one of the most powerful cloud services that modern enterprises use for data integration. If you want to explore the range of data integration capabilities that ADF offers, get in touch with our data experts.